First, import the required modules.

import cv2

import numpy as np

from matplotlib import pyplot as plt

Load YOLO

net = cv2.dnn.readNet("yolov3.weights", "yolov3.cfg")

classes = []

with open("coco.names", "rt", encoding="UTF8") as f:

    classes = [line.strip() for line in f.readlines()]

layer_names = net.getLayerNames()

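# flatten() covers OpenCV versions that return an Nx1 array as well as those that return a 1-D array

output_layers = [layer_names[i - 1] for i in np.array(net.getUnconnectedOutLayers()).flatten()]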

colors = np.random.uniform(0, 255, size=(len(classes), 3))

The files needed here are covered in the post below.

[DARKNET] How to run YOLOv3 with Darknet (hjkim5004.tistory.com)

1. Download the required files (names, cfg, weights): https://pjreddie.com/darknet/yolo/

Load the image

img = cv2.imread("images/teddy_bear.jpg")

img = cv2.resize(img, None, fx=0.4, fy=0.4)

height, width, channels = img.shape

Detect (after converting the image to a blob)

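# 0.00392 is roughly 1/255 and scales pixel values to 0..1

# crop=False resizes the whole image to 416x416, so the normalized box coordinates map back onto the full image

blob = cv2.dnn.blobFromImage(img, 0.00392, (416, 416), (0, 0, 0), True, crop=False)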

net.setInput(blob)

outs = net.forward(output_layers)

class_ids = []

confidences = []

boxes = []

for out in outs:

    for detection in out:

        # Each row is [center_x, center_y, w, h, objectness, class scores...]

        scores = detection[5:]

        class_id = np.argmax(scores)

        confidence = scores[class_id]

        if confidence != 0:

            # Debug output for every candidate with a nonzero class score

            print(class_id)

            print(confidence)

        if confidence > 0.6:

            # Box center and size are normalized, so scale them to pixels

            center_x = int(detection[0] * width)

            center_y = int(detection[1] * height)

            w = int(detection[2] * width)

            h = int(detection[3] * height)

            # Top-left corner, clamped so it never falls outside the image

            x = max(0, int(center_x - w / 2))

            y = max(0, int(center_y - h / 2))

            boxes.append([x, y, w, h])

            confidences.append(float(confidence))

            class_ids.append(int(class_id))
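To make the decoding step above easier to check by hand, here is a minimal NumPy-only sketch. It assumes the usual YOLOv3 row layout `[center_x, center_y, w, h, objectness, class scores...]` with coordinates normalized to 0..1. The helper name `decode_detection` and the sample row are hypothetical, made up for illustration.

```python
import numpy as np

def decode_detection(detection, width, height, conf_threshold=0.6):
    """Convert one YOLO output row into (box, confidence, class_id), or None.

    Assumes the row layout [cx, cy, w, h, objectness, score_0, score_1, ...]
    with cx, cy, w, h normalized to the range 0..1.
    """
    scores = detection[5:]
    class_id = int(np.argmax(scores))
    confidence = float(scores[class_id])
    if confidence <= conf_threshold:
        return None
    # Scale the normalized center and size to pixel units
    center_x = int(detection[0] * width)
    center_y = int(detection[1] * height)
    w = int(detection[2] * width)
    h = int(detection[3] * height)
    # Top-left corner, clamped to the image
    x = max(0, int(center_x - w / 2))
    y = max(0, int(center_y - h / 2))
    return [x, y, w, h], confidence, class_id

# Hypothetical row: a box centered in the image, 3 classes, class 1 strongest
row = np.array([0.5, 0.5, 0.25, 0.5, 0.9, 0.1, 0.8, 0.05])
result = decode_detection(row, width=400, height=200)
print(result)  # ([150, 50, 100, 100], 0.8, 1)
```

Rows whose best class score does not exceed the threshold return `None`, which mirrors the `confidence > 0.6` check in the loop.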

Non-maximum suppression

class_list = list(set(class_ids))

idxx = []

indexes=[]

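for i in range(len(class_list)):

    max_v = 0

    for j in range(len(class_ids)):

        if class_ids[j] == class_list[i]:

            if max_v < confidences[j]:

                max_v = confidences[j]

                idxx.append(j)

    # The last index appended is the highest-confidence box for this class

    indexes.append(idxx[len(idxx) - 1])

print(class_ids)

When a single object produces several bounding boxes, keep only the one with the highest confidence and discard the duplicates. Note that this loop keeps exactly one box per class, and its result is replaced by the NMSBoxes call in the next step.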

Remove noise

indexes = cv2.dnn.NMSBoxes(boxes, confidences, 0.5, 0.4)
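For intuition about what the call above does with its two thresholds (0.5 as the score threshold, 0.4 as the overlap threshold), here is a rough pure-Python sketch of greedy non-maximum suppression. It is not OpenCV's actual implementation. The function names `iou` and `simple_nms` and the sample boxes are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two [x, y, w, h] boxes."""
    ax1, ay1, aw, ah = box_a
    bx1, by1, bw, bh = box_b
    ax2, ay2 = ax1 + aw, ay1 + ah
    bx2, by2 = bx1 + bw, by1 + bh
    inter_w = max(0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0

def simple_nms(boxes, confidences, score_threshold=0.5, nms_threshold=0.4):
    """Greedy NMS: visit boxes from most to least confident and keep a box
    only if it does not overlap an already kept box by more than nms_threshold."""
    order = sorted(
        (i for i, c in enumerate(confidences) if c > score_threshold),
        key=lambda i: confidences[i],
        reverse=True,
    )
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= nms_threshold for k in keep):
            keep.append(i)
    return keep

# Two heavily overlapping boxes plus one separate box (made-up values)
boxes = [[10, 10, 100, 100], [12, 12, 100, 100], [300, 300, 50, 50]]
confidences = [0.9, 0.8, 0.7]
kept = simple_nms(boxes, confidences)
print(kept)  # [0, 2]
```

Unlike the per-class loop earlier, this approach can keep several boxes of the same class as long as they do not overlap much, which matters when an image contains more than one object of a class.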

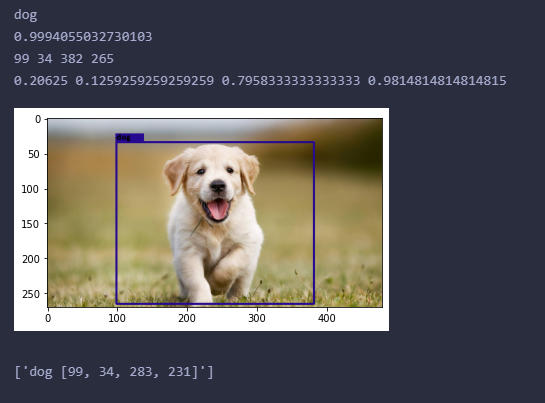

Print the class name, bounding box, and coordinates

font = cv2.FONT_HERSHEY_PLAIN

det_foods = []

for i in range(len(boxes)):

    if i in indexes:

        x, y, w, h = boxes[i]

        class_name = classes[class_ids[i]]

        print(class_name)

        print(confidences[i])

        label = f"{class_name} {boxes[i]}"

        det_foods.append(label)

        # Pick the color by class, since colors has one row per class

        color = colors[class_ids[i]]

        print(x, y, x + w, y + h)

        # Bottom-right corner normalized to 0..1 and clamped to the image

        nx = min((x + w) / width, 1)

        ny = min((y + h) / height, 1)

        cv2.rectangle(img, (x, y), (x + w, y + h), color, 2)

        print(x / width, y / height, nx, ny)

        # Filled background strip for the label, then the label text

        cv2.rectangle(img, (x - 1, y), (x + len(class_name) * 13, y - 12), color, -1)

        cv2.putText(img, class_name, (x, y - 4), cv2.FONT_HERSHEY_COMPLEX_SMALL, 0.5, (0, 0, 0), 1, cv2.LINE_AA)

b,g,r = cv2.split(img)

image2 = cv2.merge([r,g,b])

plt.imshow(image2)

#imShow(img)

plt.show()

print(det_foods)
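The loop above prints each box's corners divided by the image size and caps the bottom-right corner at 1. A small standalone sketch of that conversion, assuming boxes in `[x, y, w, h]` pixel form; the helper name `normalized_corners` and the sample values are hypothetical.

```python
def normalized_corners(box, width, height):
    """Return (x1, y1, x2, y2) as fractions of the image size, clamped to 0..1."""
    x, y, w, h = box

    def clamp(value):
        return min(max(value, 0.0), 1.0)

    return (
        clamp(x / width),
        clamp(y / height),
        clamp((x + w) / width),
        clamp((y + h) / height),
    )

# A box fully inside a 400x200 image
inside = normalized_corners([150, 50, 100, 100], width=400, height=200)
print(inside)  # (0.375, 0.25, 0.625, 0.75)

# A box that runs past the right and bottom edges gets capped at 1.0
overflow = normalized_corners([350, 150, 100, 100], width=400, height=200)
print(overflow)  # (0.875, 0.75, 1.0, 1.0)
```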

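Result with the full YOLOv3 model (not tiny):

Result with YOLOv3-tiny:

YOLOv3-tiny was noticeably less accurate than the full YOLOv3 model, and in some cases it failed to detect objects at all.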
